3.9.2021

Is Natural Language Understanding ready for Consumer app use cases?

There seem to be two emerging camps in today’s Natural Language Understanding (NLU) community building consumer apps. One camp is building basic interfaces on top of fairly simplistic use cases of GPT-3, putting them behind an app or website, and pretending that it’s AGI. I’m not bullish on these use cases for reasons I’ll describe below.

The second camp is rethinking what it means to have a UI when you can understand language and generate language in response. This group of software developers, many of whom presented at our GPT-WHAT?! event at Betaworks, are not simply making chatbots. They’re thinking about NLU as a feature in service of solving a separate problem, often hiding the NLU in places where it’s not even apparent that NLU is happening, yet the results can be magical. For example, Ought.org is using a search input to generate multiple sub-searches and then running those through Google to generate search results that may be more on point than what the person actually intended to research. Helping someone be creative and telling them you’re doing it is one thing, but helping someone be creative in pursuit of a specific outcome, and not even letting them know that’s what’s under the hood? That starts to feel like some Harry Potter magic.

🌊 Is NLU a Platform Shift?

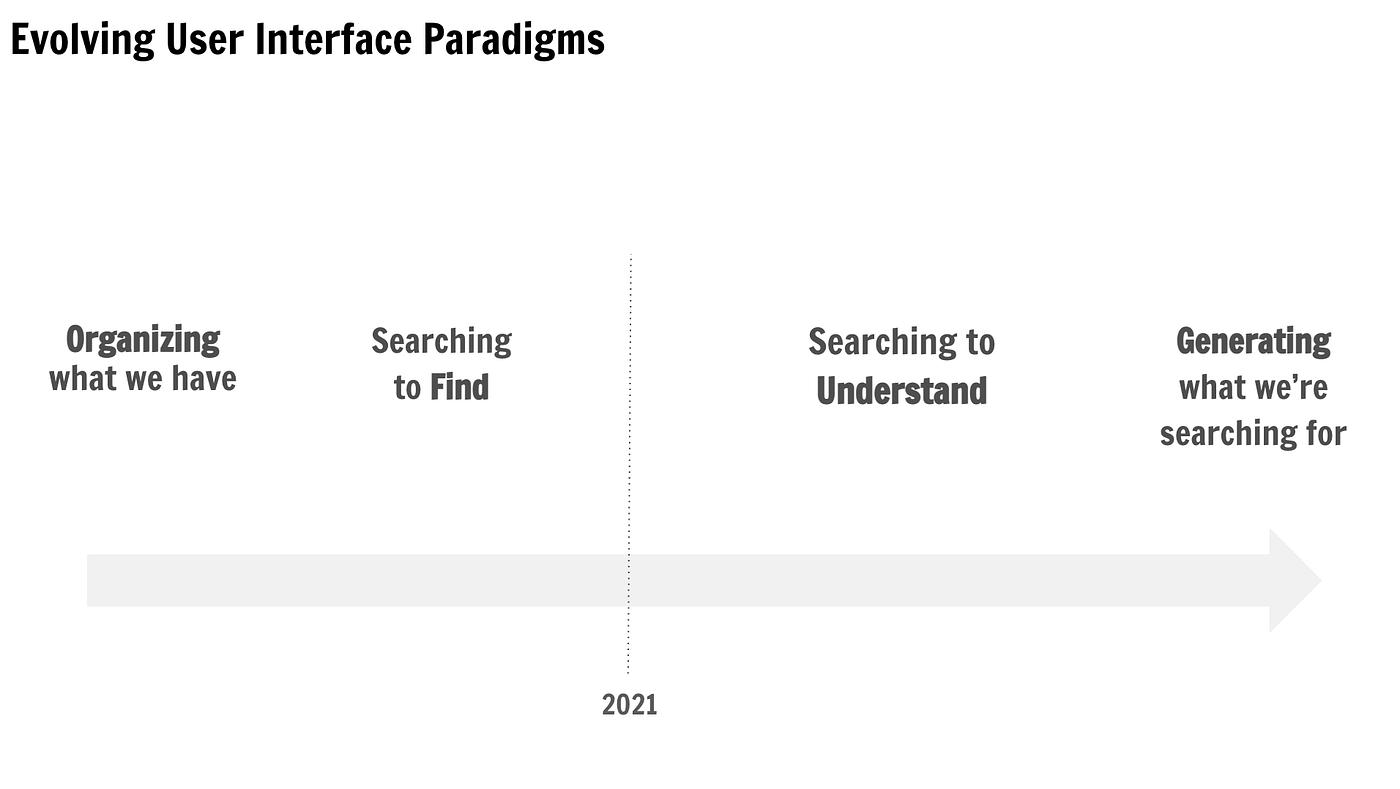

One way to view Natural Language Understanding is to think of it as a technology shift on top of which new consumer apps may or may not be built. In that way, conversational software is part of the evolving UI of consumer tech. This evolving UI started with the web becoming social (e.g., Facebook, Tumblr, Kickstarter), and then that set of social interactions moved to mobile (Twitter, Venmo, GroupMe). Through that lens, we at betaworks have been asking ourselves: what’s the next evolution of consumer user interfaces?

One emerging interface is audio, powered by a UI shift to always-in AirPods, with apps like Anchor and Clubhouse powering great consumer experiences. A second emerging interface is synthetic reality, powered by a shift to live-streamed games that started with Fortnite and that some say will inevitably lead to a metaverse.

There are other new interfaces, but one common thread is the opportunity for Natural Language Understanding to play a material role in the services that live inside these new interfaces.

💭 Metaphors

So much of technology’s power is derived from the metaphors we use. The value of Windows and folders was that we could say, “here is where my games are, here is where my documents are, here is where my contracts are.” A digital filing cabinet helped us organize and get the most out of our emerging digital world.

Once the number of files and websites grew too large, we moved from organizing what we had to searching through the plethora of files. We had so many virtual spaces and places and so much digital exhaust that our metaphors had to fundamentally change from organization to search.

Search to find is becoming search to understand. Right now that understanding is really rudimentary:

NLU is helping us evolve from asking “where is the document?” to asking “what does the document say?” In order to get there, we have to be able to derive some level of meaning. This means going from finding a document that has the title or keyword “non-disclosure agreement” to being able to ask questions of our documents. Searching to understand means being able to ask questions such as “how long does the NDA with so-and-so last?” and to get an answer as clear as “there are 8 ounces in a cup.”

This is the future, but it is not the end state.

👩‍🎨 Moving from auto-complete to auto-create

If we’re able to search for understanding, it means it’s possible to generate something new as the result of what we were searching for. Today, most examples of text generation look like some form of auto-complete. The most creative uses of generative text amount to style transfer, such as 10 different ways for Alexa to tell you what time it is: the same answer, but in a manner that is sarcastic or pithy or funny.

Moving past this uncanny valley-ish stage means using what we will have built when we searched for meaning to move forward and generate responses from documents that haven’t been created yet.

🤖 The UI of AI

Or, “wait — isn’t the point of Artificial General Intelligence to be its own UI?”

Between 2010 and 2015, you could have looked at self-driving cars and said that, based on the progress made in computer vision, it was inevitable that within five years the streets would be filled with self-driving cars. That didn’t happen, for one of two reasons: either we just needed more data and bigger models, or we needed a step-function improvement. NLU is similar.

So we ask the question of NLU: is this a problem solved by having more data, or by a step-function-level improvement? Word2vec was a step-function-level improvement that enabled neural networks to process language productively. With GPT-3, we found that adding more data created a brand new way to design a UI, and all it needed was more parameters. My sense is that we’re not going to iterate our way to general intelligence; we’re going to have to invent our way there.

In addition to the consumer UI, it’s also worth asking what developer interfaces will look like. Arguably the biggest benefit of OpenAI’s GPT-3 is that it changes how people program AI. In order to get GPT-3 to generate text, you have to create a prompt. Maybe “good prompt design” is a job of the future, something like what is emerging today for “no-code” developers.
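To make “creating a prompt” a little more concrete, here is a minimal sketch of how a few-shot prompt might be assembled as plain text before being sent to a text-completion model. Everything in it is an assumption for illustration: the function name `build_few_shot_prompt`, the `Input:`/`Output:` labels, and the example sentences are hypothetical, and no model or API is actually called.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
) -> str:
    """Assemble an instruction, worked examples, and a new input into one prompt."""
    lines = [instruction.strip(), ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # End on an open "Output:" so the model's completion becomes the answer.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


# Hypothetical example: asking for a sarcastic restyling of a plain sentence.
prompt = build_few_shot_prompt(
    instruction="Rewrite the sentence in a sarcastic tone.",
    examples=[
        ("It is 3 pm.", "Oh look, it is 3 pm. Thrilling."),
    ],
    query="It is 9 am.",
)
print(prompt)
```

The “programming” here is mostly choosing the instruction and the examples, which is why prompt design can feel closer to no-code tooling than to traditional model training.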

🔭 Searching to Understand

Examples

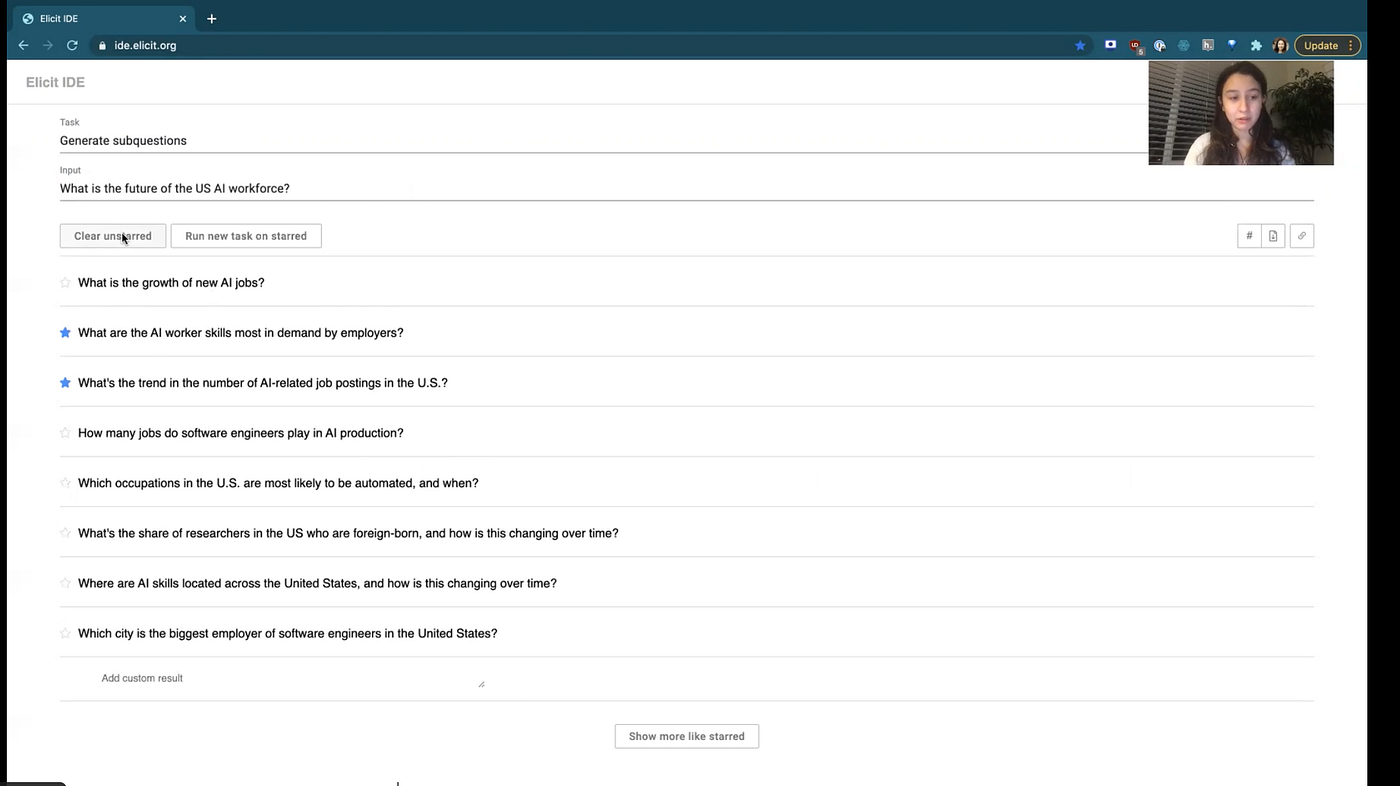

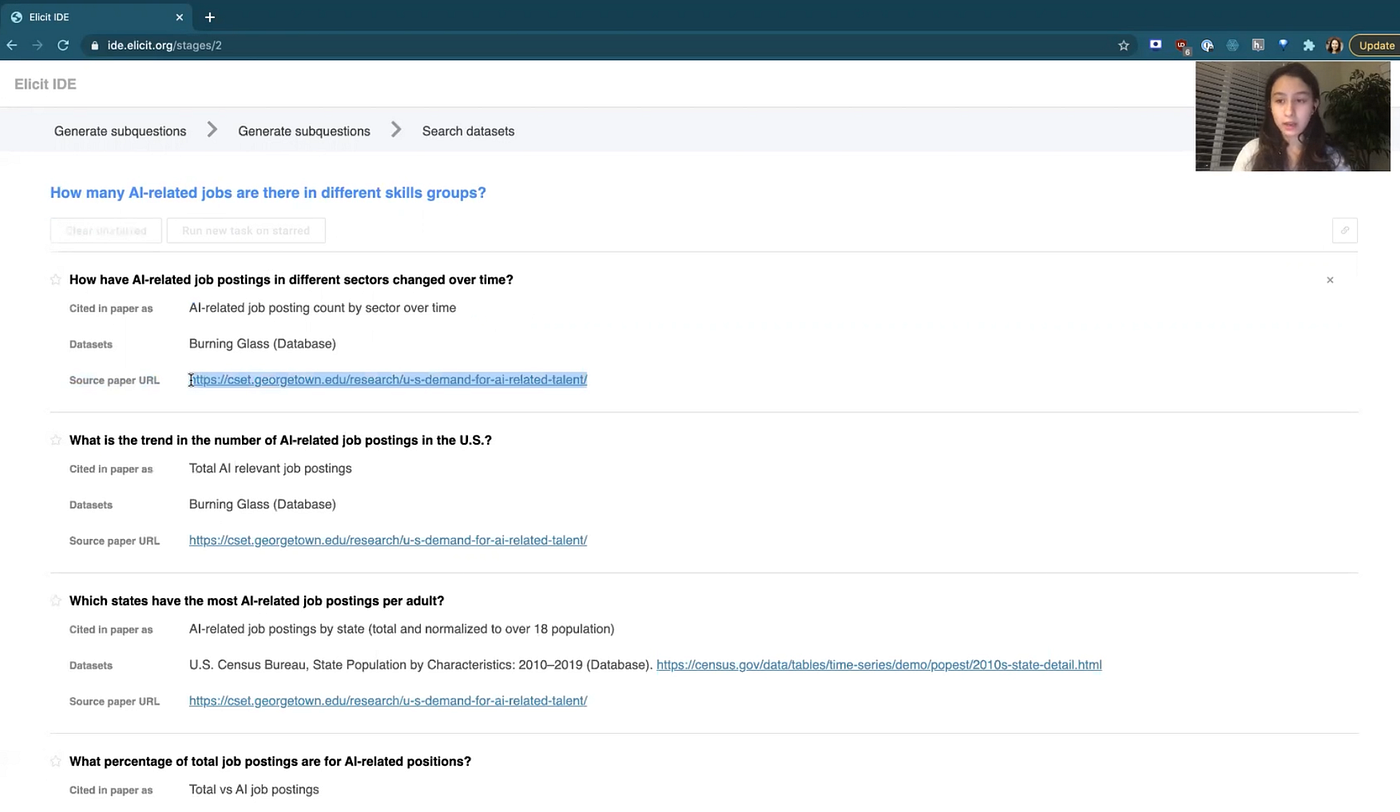

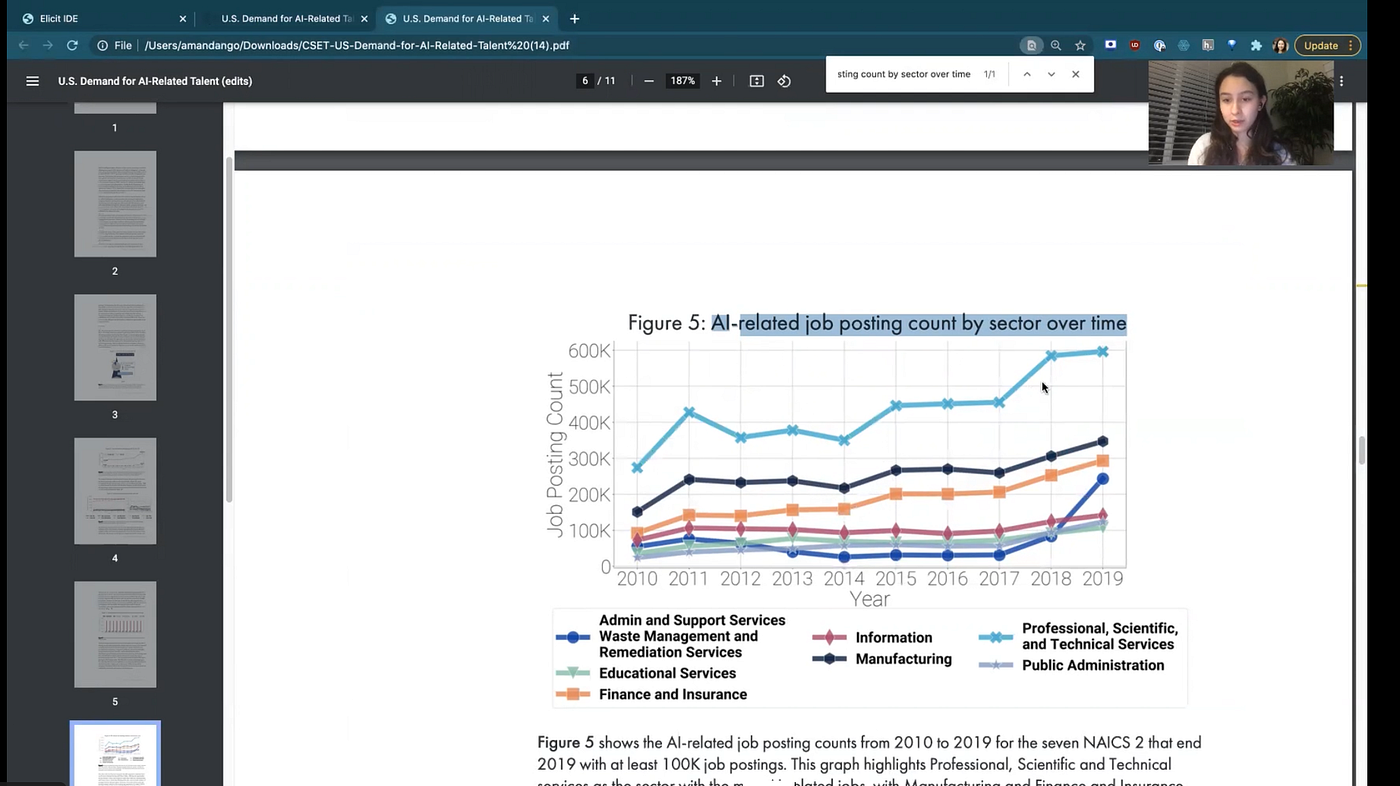

A great example of searching to understand is Elicit, which Amanda Ngo presented at the betaworks GPT-WHAT event. Shira Eisenberg described it as “Roam Research meets Google,” and it falls into a framework Kanyi Maqubela called “superpowers”: in this case, superpowers for researchers. Elicit is an AI research assistant that lets people input a research question and uses Natural Language Generation to generate related subquestions, which the researcher can then select. Once all subquestions are selected, Elicit scours the literature and returns relevant publications. Perhaps most interestingly, Amanda showed how you can dive into a specific publication to find the actual subquestion answer you were looking for:

This is a great example for two reasons. First, it’s a concrete example of how NLU can co-create with human beings. Second, it’s an outgrowth of a project which helps people elicit positive reframing of negative thoughts. Note that the reframing use case might sound like more of a toy for entertainment, but the very same technology has high utility as a superpower for researchers. I think we’re in the “toy” phase for many of these products, but that, similarly, they will have high-utility cousins.

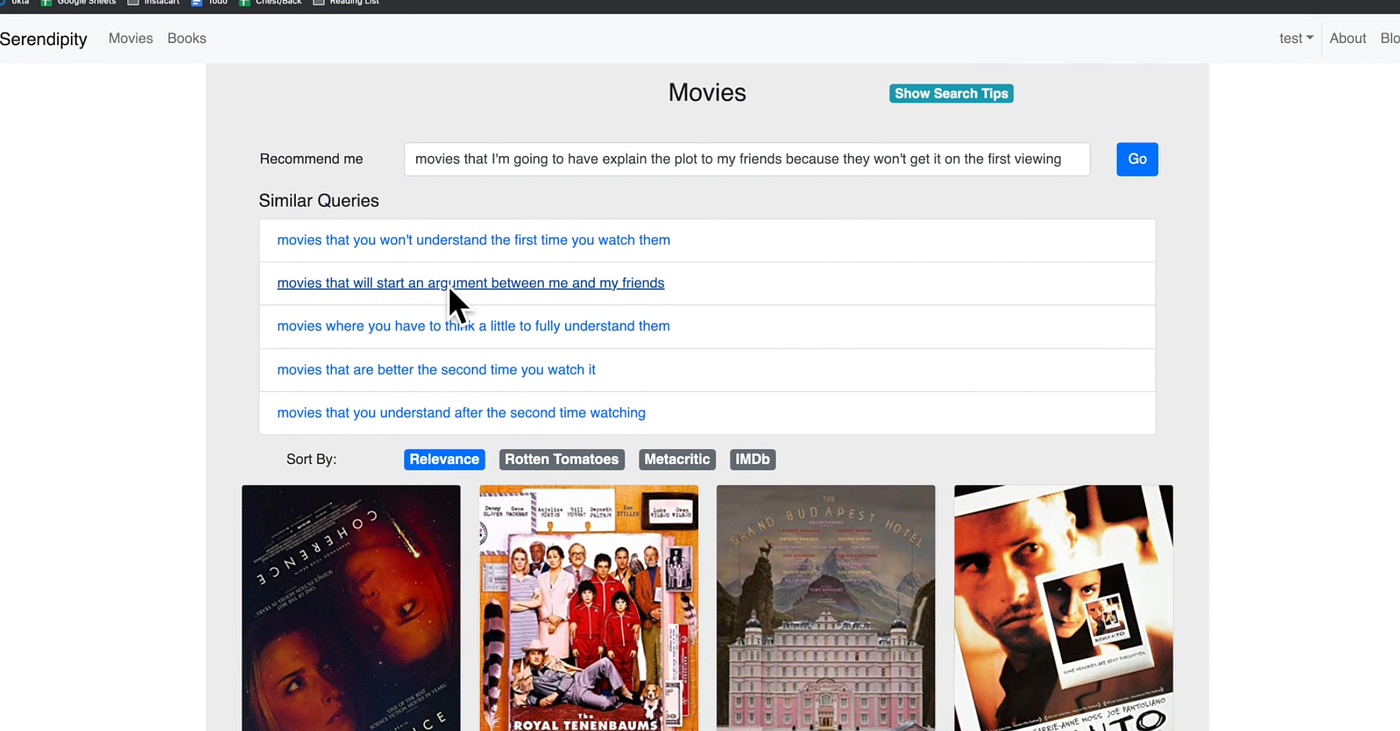

Searching to understand is also being applied to go from one media type to another. For example, James Zhu shared a new search interface for using natural language to find movies (and books) by a mood or feeling that Google typically doesn’t index:

Searching to understand in movies and books, as in the examples above, can start to make things feel pretty magical for a user.

Giphy has also used this approach of taking text and ultimately outputting an animated GIF. In this case, they used the concept of word2vec and created a gif2vec model.
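As a rough illustration of the general word2vec-style idea (not Giphy’s actual gif2vec implementation, whose details are not described here), the sketch below represents a text query and a few GIFs as vectors in the same space and ranks the GIFs by cosine similarity to the query. The file names, the three-dimensional vectors, and the function names are all made up for the example; a real system would learn these vectors from data.

```python
import math
from typing import Dict, List


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hand-made toy vectors (assumption): real embeddings are learned, not typed in.
GIF_VECTORS: Dict[str, List[float]] = {
    "happy_dance.gif": [0.9, 0.1, 0.0],
    "sad_rain.gif": [0.0, 0.2, 0.9],
    "slow_clap.gif": [0.4, 0.9, 0.1],
}


def rank_gifs(query_vector: List[float], gif_vectors: Dict[str, List[float]]) -> List[str]:
    """Return GIF names ordered from most to least similar to the query."""
    return sorted(
        gif_vectors,
        key=lambda name: cosine_similarity(query_vector, gif_vectors[name]),
        reverse=True,
    )


# Pretend this is the embedding of a text query such as "celebrate".
query = [0.8, 0.2, 0.1]
print(rank_gifs(query, GIF_VECTORS))
```

The point of the shared vector space is that text and GIFs become directly comparable, so a search for a feeling can return media that was never tagged with that exact word.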

🔨 Generating What We’re Searching For

Examples

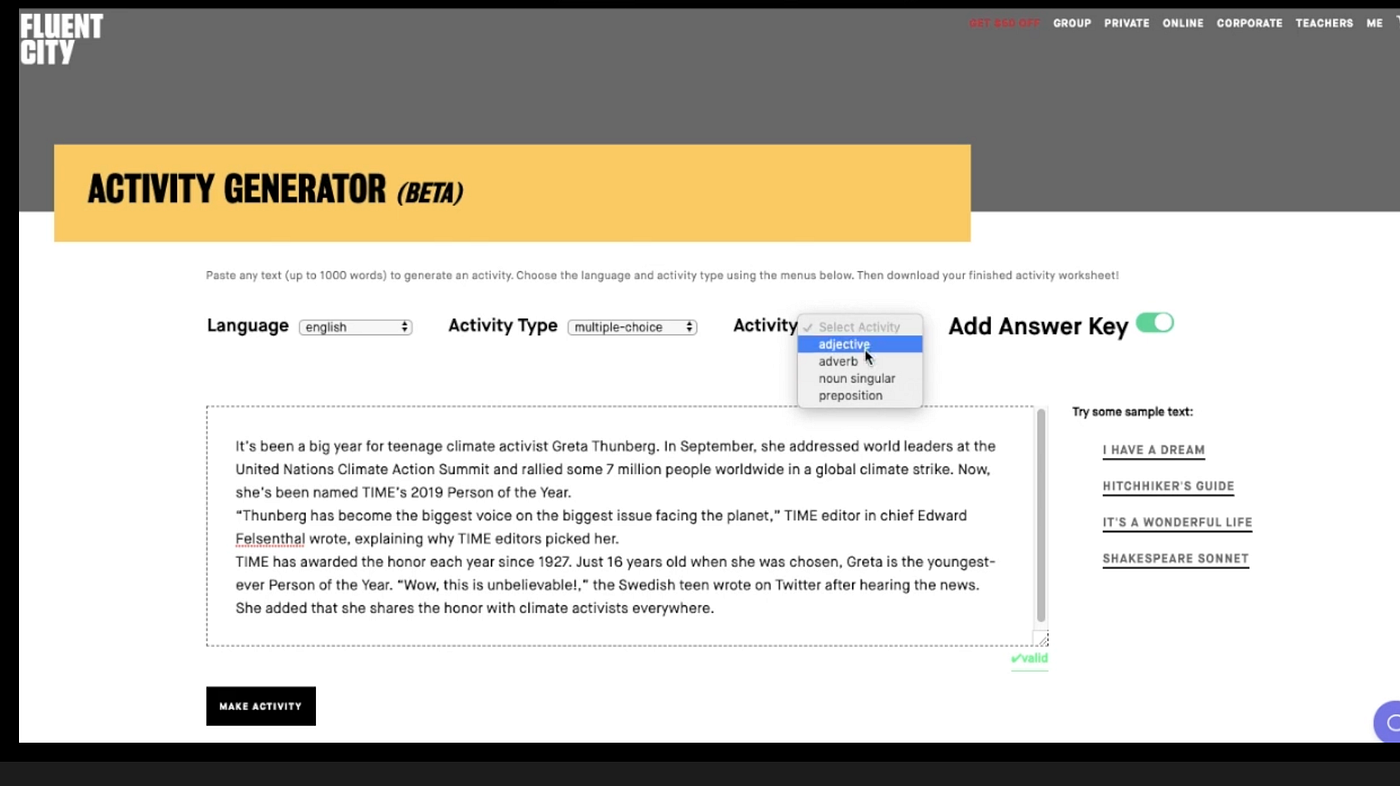

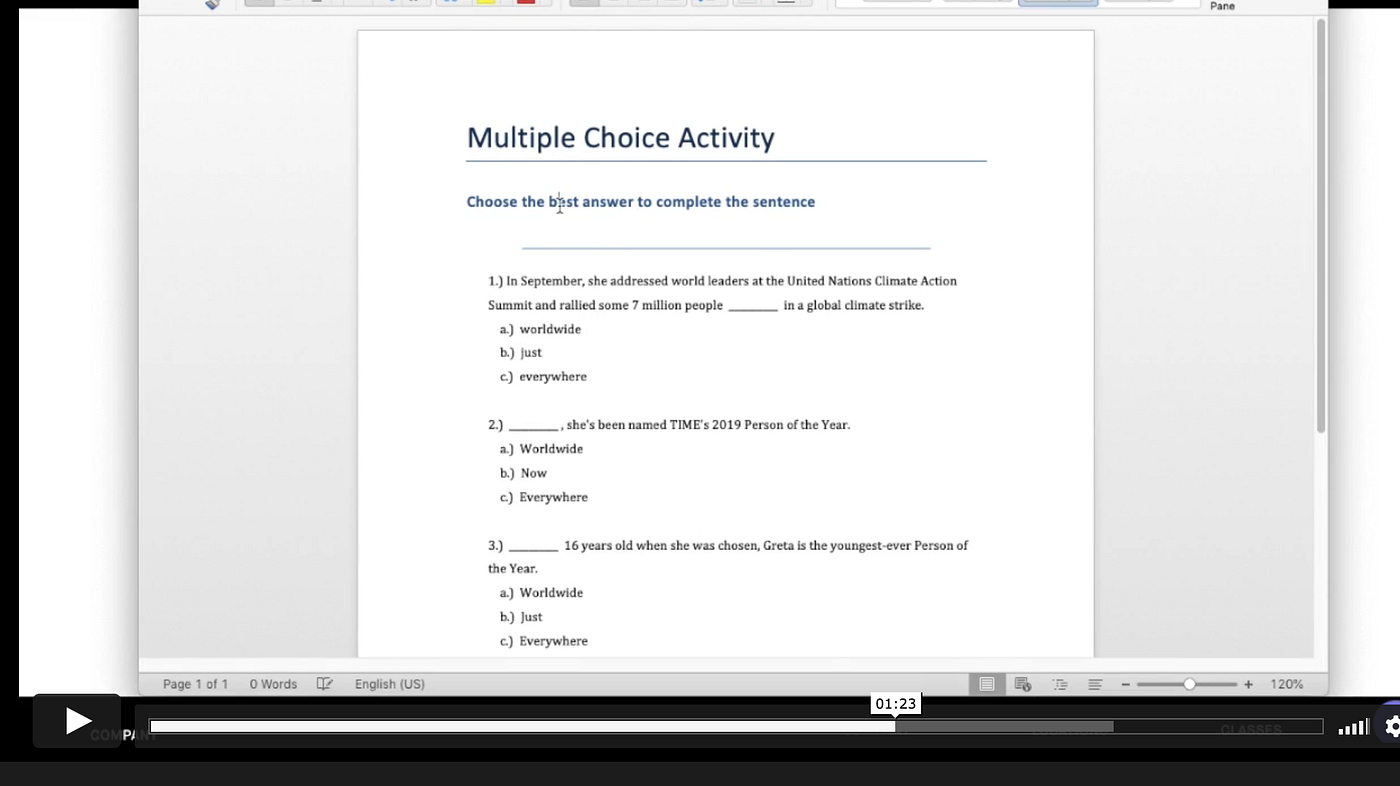

Below is Fluent City’s Activity Generator, which gives teachers the power to instantly create custom activities from virtually any text they want to use with their students:

Co-creation doesn’t have to be a single back and forth with a single moment of output. Hilary Mason shared her thinking on how NLP systems can be “full participants” in narrative storytelling experiences in her new product Hidden Door, which lets kids co-create narrative stories.

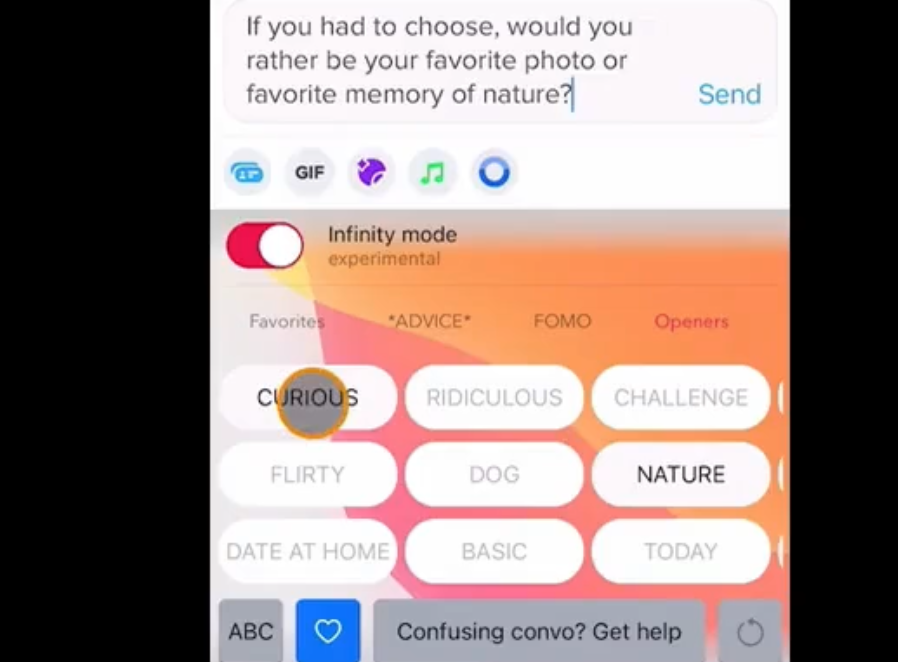

Some people have already started creating functional text message companions, whether they’re a sidekick or a partner. For example, Taylor Margot shared Keys, which is an iOS keyboard that generates ways to break the ice, whether that’s for starting business conversations or for using in dating apps:

There are also some NSFM (Not Safe For Medium?) examples, like Juicebox, which has created a text-based erotic fiction experience that aims to help people feel comfortable with sexuality.

Stephen Campbell presented Virtual Ghost Writer, which explores what the UI should look like on top of text completion, answering, and summarization. One thing to note here is that Stephen created this product using no-code software. So when we think about what the best UI is for enabling people to use the latest natural language libraries, it’s also worth considering that an answer may be something that looks like Webflow or Zapier.

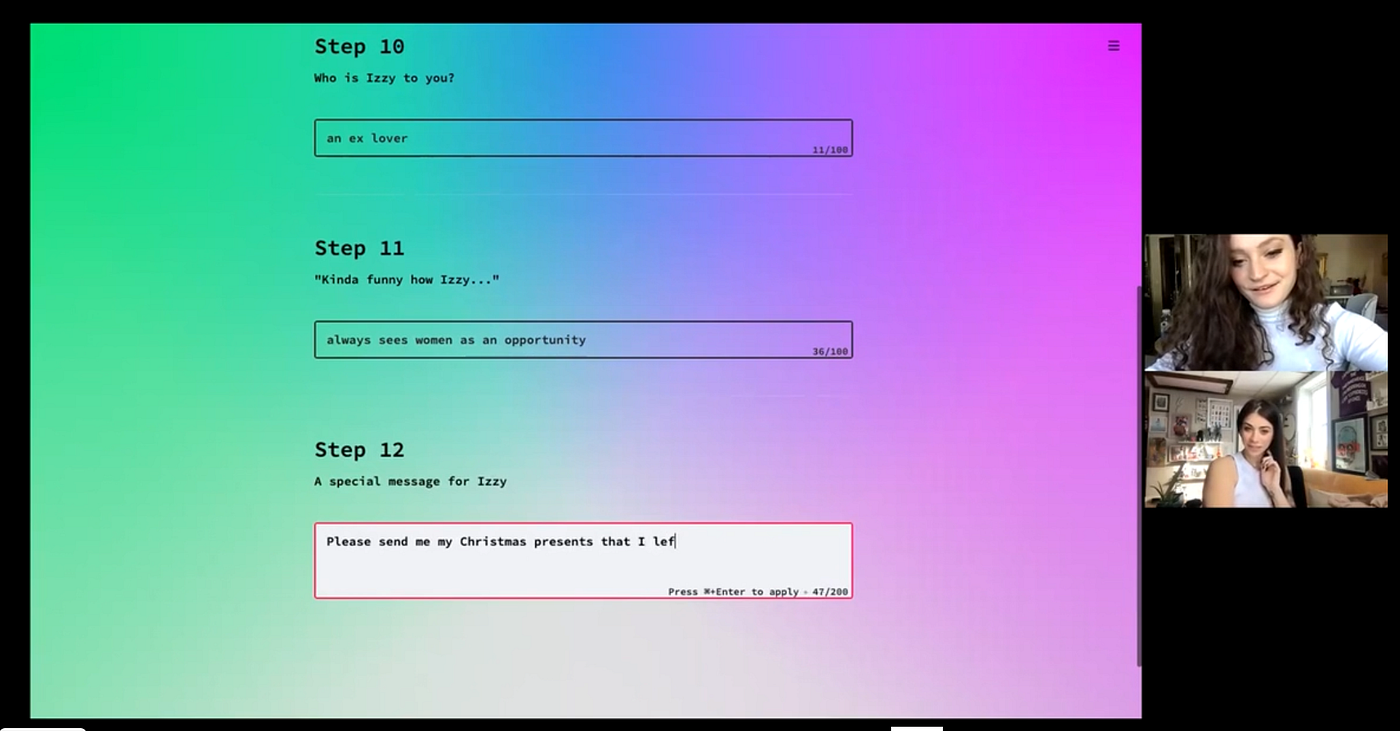

When we think about consumer tech, one common attribute is simply that using the product is fun and worth sharing with friends. Shira Eisenberg created “Songs No One Asked For” which takes a Mad Libs type approach to asking about your life and relationships and generating a set of lyrics. One of the things we pay close attention to at Betaworks is moments of delight in consumer apps. The video of Shira and her friend co-creating the song with her Natural Language Generator created clear moments of delight not just for users but for everyone witnessing the song being written.

🚲 Bicycles For Our Minds

Steve Jobs remarked that there had been a study on which animals could travel a mile while using the least amount of energy (i.e., most efficiently). The condor was #1 and humans were somewhere in the middle of the list. Then someone smart measured the energy use of a human traveling a mile on a bicycle, and it blew the condor out of the water. As human beings, our highest and best use isn’t being able to accomplish tasks alone; it’s the ability to build the tools that will help us create our own superpowers. Jobs likened the computer to the highest-leverage tool we could create: bicycles for our minds.

However, over the last decade, value often accrued to the tech platforms that could aggregate and monetize attention. That led to a number of perverse incentives such as harvesting data and using behavioral psychology to get people to stay on a site for longer or click another link. We might call that candy canes for our minds — the pure sugar of distraction. We not only did this to each other (e.g., optimized headlines), but the machines evolved to do it to us as well — we trained algorithms to lead us down rabbit holes in the name of one more click or one more moment of attention.

With these new NLU consumer use cases, we have an opportunity to reinvent how we train and use the next generation of machine learning. One of the promises of NLU is that it creates value for the people using it, rather than treating its users as the product. The example applications above are built to help people, with assistance from NLU. This emerging technology allows us to better understand information and the world around us, and it stands in stark contrast to attention harvesting. In that way, if NLU is truly a platform shift, it will enable software developers to help further Steve Jobs’ vision of developing the next generation of bicycles for our minds.

Thanks for reading. You can follow me on Twitter @matthartman or find me at matthewhartman.com. I also curate the newsletter “gptWHAT?!”, with links to interesting tech-related news and hot-take headlines generated by GPT-3. You can find it here.

If you’d like to see videos from the GPT-WHAT event, text “#NLUvideos” to me at 917–809–7099 and I’ll send you the link.